In contrast to extractive summarization, where a summary is composed of a subset of sentences or words lifted verbatim from the input text, abstractive summarization requires the generative ability to rephrase and restructure sentences into a coherent and concise summary. Learning to produce good abstractive summaries is difficult because it requires learning both how to extract meaningful content from documents and how to communicate it concisely.

Because documents and summaries communicate information in different ways, they differ in their level of detail, which suggests that each type of text has its own latent discourse properties. We explore modeling architectures that learn to handle these latent discourse properties, so that the model selects more salient information from the document and expresses it more clearly and concisely on the decoder side.

Associated Publications

Asli Çelikyilmaz, Antoine Bosselut, Xiaodong He, Yejin Choi (2018). Deep Communicating Agents for Abstractive Summarization. Proceedings of the 16th Annual Meeting of the North American Chapter of the Association for Computational Linguistics (NAACL).

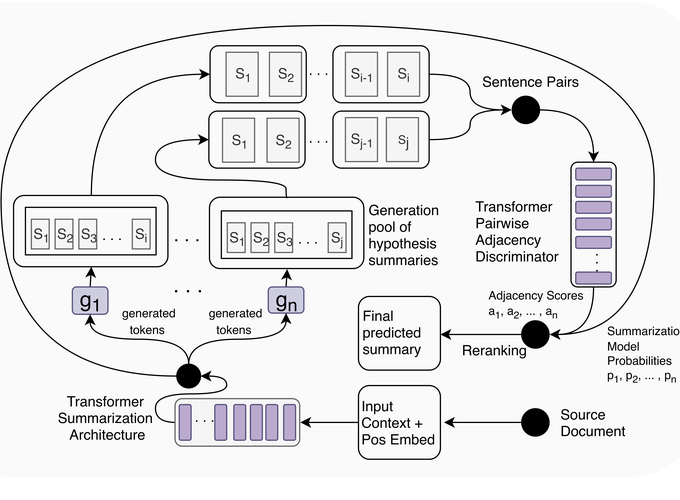

Saadia Gabriel, Antoine Bosselut, Ari Holtzman, Kyle Lo, Asli Çelikyilmaz, Yejin Choi (2019). Cooperative Generator-Discriminator Networks for Abstractive Summarization with Narrative Flow. arXiv: 1907.01272.

Andrew Hoang, Antoine Bosselut, Asli Çelikyilmaz, Yejin Choi (2019). Efficient Adaptation of Pretrained Transformers for Abstractive Summarization. arXiv: 1906.00138.