Modern neural language generation models often produce text that is generic and repetitive, and sometimes self-contradictory or incoherent, particularly as sequences grow longer. This is partly due to the objective that language models optimize, word-by-word negative log-likelihood, which is not expressive enough to capture global communicative goals. As a result, common sequences that may be unrelated to the generation goal are often produced at the expense of sequences that are more complex (and therefore harder to generate) but better convey the original communicative goal.
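
For reference, the word-by-word objective can be written as below. The notation ($w_t$ for the word at position $t$, $T$ for the sequence length, $\theta$ for the model parameters) is introduced here for illustration and is not taken from the cited papers.

$$
\mathcal{L}(\theta) = -\sum_{t=1}^{T} \log p_\theta\left(w_t \mid w_1, \ldots, w_{t-1}\right)
$$

Each term scores a single word given the words before it, so nothing in the sum directly rewards properties of the sequence as a whole, such as coherence or the absence of repetition.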

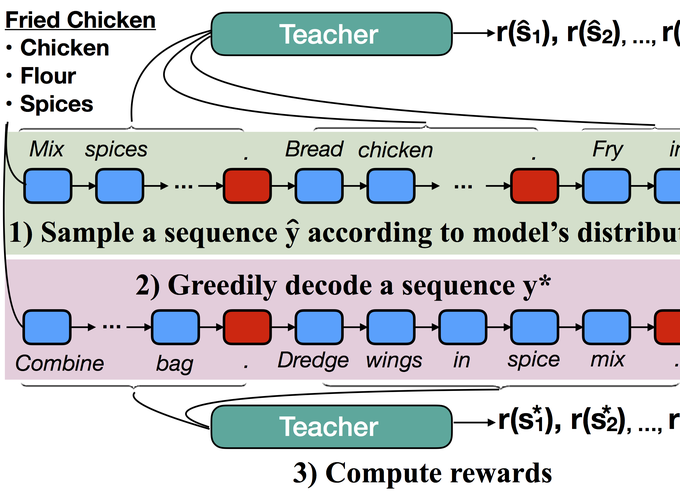

My teammates and I have worked on integrating discriminator methods into generation pipelines. These discriminators provide additional scoring functions that can be used at either training or evaluation time to bias generators toward producing more coherent sequences. Across a wide variety of text generation tasks, including recipes (Bosselut et al., 2018), summarization (Gabriel et al., 2019), reviews (Holtzman et al., 2018), and story endings (Holtzman et al., 2018), our methods have produced higher-quality text than comparable baselines that use only local maximum likelihood estimation as an objective.
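
As a rough illustration of using discriminators as extra scoring functions at evaluation time, the sketch below reranks candidate sequences by their log-likelihood plus a weighted sum of discriminator scores. It is a minimal toy under stated assumptions, not the method from the cited papers: the names `repetition_score`, `combined_score`, and `rerank`, the weights, and the candidate log-likelihoods are all hypothetical, and a hand-written repetition heuristic stands in for a learned discriminator.

```python
from typing import Callable, List, Sequence, Tuple

# A discriminator maps a token sequence to a score (higher is better).
Discriminator = Callable[[Sequence[str]], float]
# A candidate is (tokens, log-likelihood under the generator).
Candidate = Tuple[List[str], float]


def repetition_score(tokens: Sequence[str]) -> float:
    """Hypothetical stand-in for a learned discriminator:
    the fraction of distinct tokens (1.0 means no repeated tokens)."""
    if not tokens:
        return 0.0
    return len(set(tokens)) / len(tokens)


def combined_score(
    candidate: Candidate,
    discriminators: Sequence[Discriminator],
    weights: Sequence[float],
) -> float:
    """Generator log-likelihood plus a weighted sum of discriminator scores."""
    tokens, log_likelihood = candidate
    bonus = sum(w * d(tokens) for w, d in zip(weights, discriminators))
    return log_likelihood + bonus


def rerank(
    candidates: Sequence[Candidate],
    discriminators: Sequence[Discriminator],
    weights: Sequence[float],
) -> List[Candidate]:
    """Sort candidates from best to worst combined score."""
    return sorted(
        candidates,
        key=lambda c: combined_score(c, discriminators, weights),
        reverse=True,
    )


# Made-up candidates: the generic, repetitive one has the higher likelihood.
candidates: List[Candidate] = [
    ("the food was good good good good".split(), -4.0),
    ("the pasta was fresh and well seasoned".split(), -6.0),
]

likelihood_only = rerank(candidates, [repetition_score], [0.0])
with_discriminator = rerank(candidates, [repetition_score], [5.0])
print(" ".join(likelihood_only[0][0]))
print(" ".join(with_discriminator[0][0]))
```

With the weight set to zero, the ranking reduces to likelihood alone and the repetitive candidate wins. With a positive weight, the less repetitive candidate is preferred. The same combined score could in principle be applied during search rather than after it, or used as a training signal, which this sketch does not attempt.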

Associated Publications

Antoine Bosselut, Asli Çelikyilmaz, Xiaodong He, Jianfeng Gao, Po-Sen Huang, Yejin Choi (2018). Discourse-Aware Neural Rewards for Coherent Text Generation. Proceedings of the 16th Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL).

Saadia Gabriel, Antoine Bosselut, Ari Holtzman, Kyle Lo, Asli Çelikyilmaz, Yejin Choi (2019). Cooperative Generator-Discriminator Networks for Abstractive Summarization with Narrative Flow. arXiv:1907.01272.

Ari Holtzman, Jan Buys, Maxwell Forbes, Antoine Bosselut, David Golub, Yejin Choi (2018). Learning to Write with Cooperative Discriminators. Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (ACL).