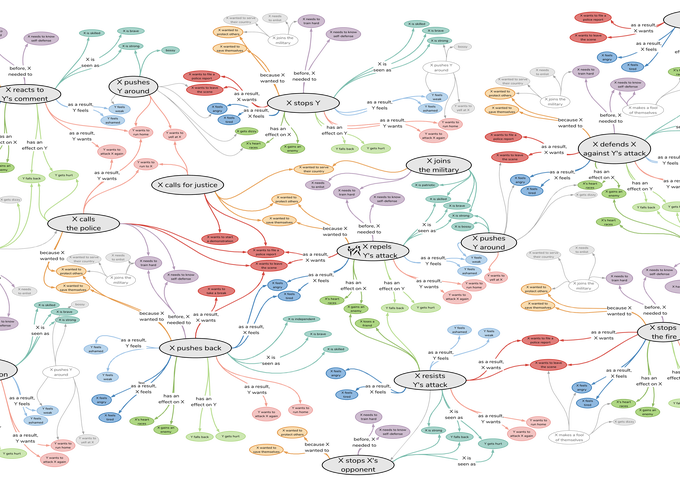

When reading text, humans make commonsense inferences that frame their understanding of the narrative being presented. For machines to achieve this capability, they must be able to acquire relevant and correct commonsense knowledge for an unbounded set of situations. We cast the problem of commonsense acquisition as knowledge base construction and investigate whether large-scale language models can effectively learn to generate the knowledge necessary to automatically construct a commonsense knowledge base.

While many approaches exist for knowledge base construction and completion, commonsense knowledge has properties that make typical solutions difficult to apply. First, commonsense knowledge does not fit cleanly into a schema that links two entities through a known relation. A natural language phrase such as “going to the mall” has different commonsense properties than the entity “mall” alone, so it requires more expressive representations than atomic entities. Second, commonsense knowledge is often left unstated in text, which makes it difficult to extract directly, as information extraction approaches attempt to do.
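To make the representational point concrete, the sketch below stores knowledge as (head, relation, tail) triples whose head and tail are free-text phrases rather than entity IDs. The tuples are illustrative and were written for this example; they are not drawn from ATOMIC or ConceptNet. The relation labels are borrowed loosely from ConceptNet (`UsedFor`, `HasProperty`) and ATOMIC (`xIntent`, `xNeed`), and the class and function names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CommonsenseTuple:
    """A (head, relation, tail) triple whose head and tail are free-text phrases."""
    head: str
    relation: str
    tail: str


# Illustrative tuples for this sketch only (not taken from ATOMIC or ConceptNet).
tuples = [
    CommonsenseTuple("mall", "HasProperty", "crowded on weekends"),
    CommonsenseTuple("mall", "UsedFor", "shopping"),
    CommonsenseTuple("going to the mall", "xIntent", "to buy new clothes"),
    CommonsenseTuple("going to the mall", "xNeed", "to get a ride"),
]


def index_by_head(kb):
    """Group (relation, tail) pairs by head phrase so phrases can be compared."""
    index = defaultdict(set)
    for t in kb:
        index[t.head].add((t.relation, t.tail))
    return dict(index)


index = index_by_head(tuples)

# In this toy set, the event phrase and the bare entity share no knowledge.
print(index["going to the mall"] & index["mall"])  # set()
```

Because the head is an arbitrary phrase, “going to the mall” gets its own entry with its own properties instead of being collapsed onto the entity “mall”.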

Large-scale language models, however, provide an opportunity for a new approach. Through pretraining on billions of sentences, these models learn latent relationships between concepts expressed in text. When fine-tuned on a small set of seed tuples that encode the relations and structure of a knowledge base, they can be adapted to generate a wide variety of commonsense knowledge tuples, including some that extractive methods could not discover directly in text.
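The sketch below illustrates the general recipe under stated assumptions: each seed tuple is serialized into a (prompt, target) text pair for fine-tuning, and the fine-tuned model is then queried for every (head, relation) pair to populate new tuples. The `[GEN]` separator, the prompt layout, and all function names are hypothetical choices for this example, and `toy_generate` is a canned stand-in for a real fine-tuned language model, not the method used in the publications listed below.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple

GEN_TOKEN = "[GEN]"  # hypothetical separator marking where generation begins


@dataclass(frozen=True)
class KnowledgeTuple:
    head: str
    relation: str
    tail: str


def make_prompt(head: str, relation: str) -> str:
    """Serialize a (head, relation) pair as the text the model conditions on."""
    return f"{head} {relation} {GEN_TOKEN}"


def to_training_example(t: KnowledgeTuple) -> Tuple[str, str]:
    """Turn a seed tuple into a (prompt, target) pair for LM fine-tuning."""
    return make_prompt(t.head, t.relation), f" {t.tail}"


def build_kb(heads: Iterable[str], relations: Iterable[str],
             generate: Callable[[str], List[str]]) -> List[KnowledgeTuple]:
    """Query the generator for every (head, relation) pair and collect tuples."""
    relations = list(relations)
    kb = []
    for head in heads:
        for rel in relations:
            for tail in generate(make_prompt(head, rel)):
                kb.append(KnowledgeTuple(head, rel, tail.strip()))
    return kb


def toy_generate(prompt: str) -> List[str]:
    """Stand-in for a fine-tuned LM: returns canned continuations for a prompt."""
    canned = {"going to a concert xIntent [GEN]": [" to hear live music"]}
    return canned.get(prompt, [])


seed = KnowledgeTuple("going to the mall", "xIntent", "to buy new clothes")
print(to_training_example(seed))
# ('going to the mall xIntent [GEN]', ' to buy new clothes')

kb = build_kb(["going to a concert"], ["xIntent", "xNeed"], toy_generate)
print(kb)
```

A generative model can produce tails for heads that never appear in the seed set, which is what lets this approach go beyond tuples that can be extracted from text.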

Associated Publications

Jeff Da, Ronan Le Bras, Ximing Lu, Yejin Choi, Antoine Bosselut (2021). Understanding Few-Shot Commonsense Knowledge Models. arXiv:2101.00297.

Jena D. Hwang, Chandra Bhagavatula, Ronan Le Bras, Jeff Da, Keisuke Sakaguchi, Antoine Bosselut, Yejin Choi (2021). (Comet-)Atomic 2020: On Symbolic and Neural Commonsense Knowledge Graphs. Proceedings of the 35th AAAI Conference on Artificial Intelligence (AAAI).

Antoine Bosselut, Ronan Le Bras, Yejin Choi (2021). Dynamic Knowledge Graph Construction for Zero-shot Commonsense Question Answering. Proceedings of the 35th AAAI Conference on Artificial Intelligence (AAAI).

Antoine Bosselut, Hannah Rashkin, Maarten Sap, Chaitanya Malaviya, Asli Çelikyilmaz, Yejin Choi (2019). COMET: Commonsense Transformers for Automatic Knowledge Graph Construction. Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics (ACL).

Chaitanya Malaviya, Chandra Bhagavatula, Antoine Bosselut, Yejin Choi (2020). Exploiting Structural and Semantic Context for Commonsense Knowledge Base Completion. Proceedings of the 34th AAAI Conference on Artificial Intelligence (AAAI).

Resources

COMET Demo

ATOMIC Knowledge Graph

ConceptNet Knowledge Graph

ConceptNet subset used in our work